Key Takeaways

- Despite rapid development, AI lacks real-world experience and cannot fully understand the consequences of its decisions.

- Current training methods rely on controlled, rule-bound sandboxes, filtered datasets, and adversarial testing, which only teach AI abstract patterns rather than cause-and-effect understanding.

- Exposing AI to harmful or unethical scenarios in the most realistic way possible is controversial, but doing so within carefully monitored sandboxes allows it to develop better detection and decision-making skills without creating real-world harm.

- Researchers, ethicists, and AI experts are exploring semi-realistic environments to provide richer, more realistic AI training experiences.

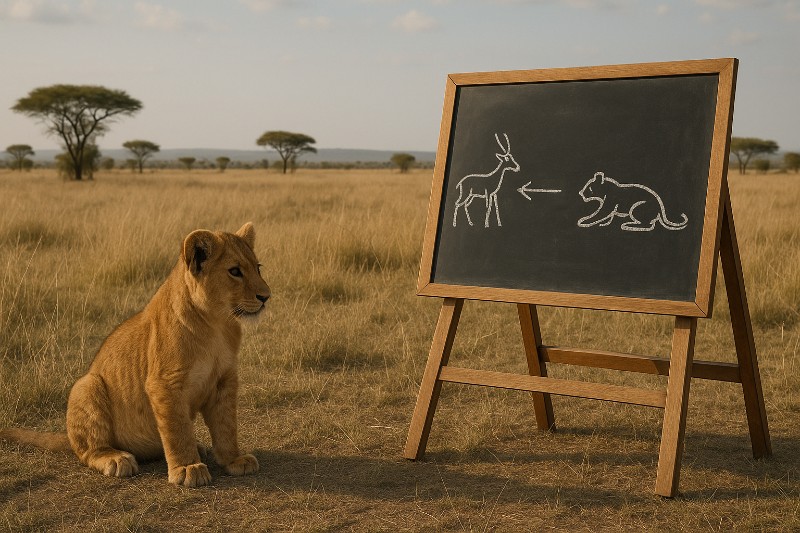

Picture a lion cub that has never felt grass beneath its paws. It has never stumbled while learning to run, never tussled with its siblings, and never followed its mother in search of a meal. Instead of a natural environment, it has been raised in a controlled enclosure where every surface is kept clean and every meal arrives on schedule. Now imagine opening the gate and expecting that cub to survive in the wild.

That’s exactly what we’re doing with AI today.

Expectations vs. Reality

In many ways, AI is still in its infancy. Yet we expect it to make smart decisions about virtually everything. But here’s the problem: how could an AI do that when it has never encountered the threats it’s meant to defend against, or experienced any real-world consequences of breaking rules or failing to meet its responsibilities in areas like privacy, security, fairness, and ethics?

Just think about how we currently train AI models. We build them in rule-bound simulations, feed them carefully filtered data (even when that data includes malicious and corrupted examples, it’s still been carefully selected and sanitised), and punish them for so much as admitting they’re not sure about something. But is this really the best way to train AI? Shouldn’t we let it ‘learn’ through experience, from both successes and failures, from what works and what doesn’t? Of course, AI doesn’t learn the way humans do, as it doesn’t have consciousness or lived experience. But it does improve through exposure to diverse realistic scenarios during training.

An AI, whether we’re talking about simple tools or more advanced systems like ChatGPT, Claude, or Gemini, has never faced the evolving, real-world challenges people encounter every day. AI models do go through extensive adversarial training and sandbox testing that mimic real-world attacks. But there’s a fundamental difference between simulated exposure to threats and genuinely experiencing realistic repercussions, even if those experiences would still take place entirely within a controlled sandbox environment.

So an AI knows, in theory, about harmful things like manipulative behaviour, scams, cyber attacks, and biased data. But how could it be better trained to detect or respond to them if it hasn’t experienced these situations firsthand, or even had the chance to play “the bad guy” and see the consequences for itself?

The Risks Developers Worry About

As AI grows more capable, the way we train it and how far we let it explore remains a serious concern. The idea of training AI through exposure to harmful scenarios is genuinely controversial and warrants careful consideration for the following reasons:

- Ethical issues – Allowing AI to simulate morally questionable or illegal actions could raise concerns about whether it’s appropriate to expose it to such content, even in a controlled environment.

- Data or privacy breaches – In some sandboxed learning environments, there might be a risk (however small) that sensitive data could be exposed or mishandled.

- Unintended harmful behaviour – Experts worry that an AI trained in simulations might develop unexpected strategies or learn to replicate harmful patterns (like deception or manipulation) that could be dangerous if deployed in real-world systems without careful supervision.

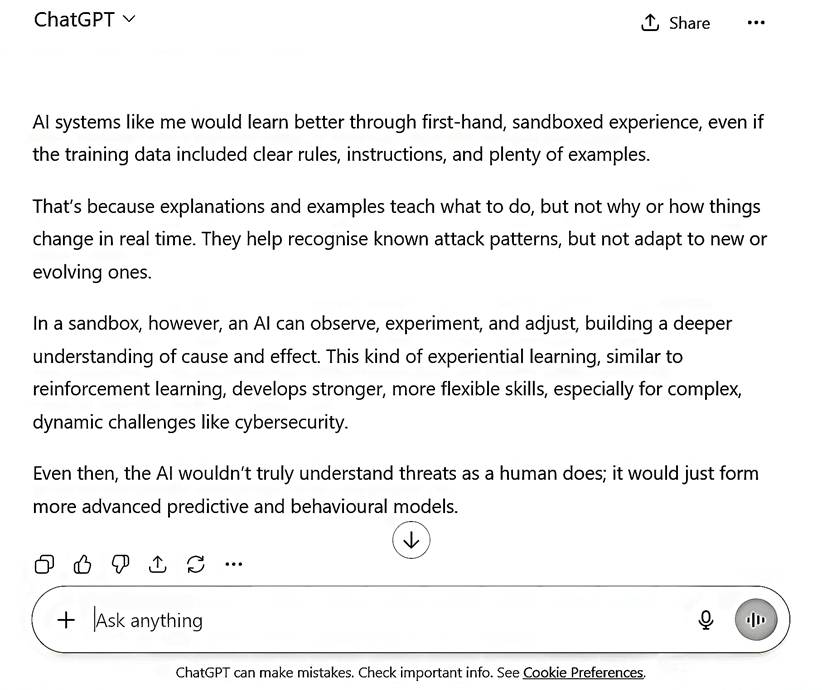

However, there’s one thing we need to be aware of: AI cannot truly identify threats — or their complexity and consequences — that it only knows in the abstract. I even asked ChatGPT about this, and here’s what it said:

Therefore, just like with humans, an AI’s capability can only come from being exposed to real problems and risks, from seeing how things actually work, and from recognising the mindset and even the reasons behind every behaviour.

So, maybe it’s not enough to simply run AI through simulations. To get closer to understanding the real world — as much as AI can — it also needs to experience the ripple effects of the choices it makes and situations it may create itself. Only by navigating these cause-and-effect dynamics can it be better prepared to handle the messy, unpredictable world we live in.

What Sandboxing Is and Why AIs Need to Operate ‘Illegally’

In technology, a ‘sandbox’ is an isolated environment where developers can test new code without risking anything critical. In other words, it’s a controlled digital testing ground: clean, contained, and predictable.

But when it comes to AI, sandboxing can serve a more provocative purpose. Think of it as a ‘moral crash-test site’, where an AI can make mistakes, push boundaries, even explore unethical, dangerous, or illegal decisions — all without causing real-world harm. Simply put, the experiences an AI has in a sandbox will teach it how to walk through darkness without becoming the monster.

Why should we let AI operate outside the permitted boundaries (though still within contained environments)? Think about it this way: what’s the best way to teach someone about fraud? Of course, you could lecture them about it, show them statistics, and explain the legal consequences. But what if you allowed them to examine the process from the inside by letting them step into the role of the fraudster, plan the scheme, build trust, exploit it, and watch how each lie compounds until everything collapses? Which approach do you think would leave the lesson that really sticks?

What might this look like in practice? Here are a few examples:

- To begin with the obvious, we could let an AI simulate committing fraud. That way, it could recognise the indicators of deception from the inside, so when it encounters similar patterns in the real world, it can spot them instantly and avoid being fooled.

- We could allow an AI to build a propaganda machine from scratch. Give it access to every tool it needs to craft disinformation campaigns and measure how false narratives spread, how they exploit emotions and biases, how they turn people against one another. If an AI sees exactly how misinformation works at every level, it stands a better chance of stopping it before real harm is done.

- We could also let an AI conduct a coordinated data breach or penetration test. Give it established attack frameworks or allow it to adapt techniques based on what it discovers, then let it trace how data is exfiltrated and where detection fails. By training on the complete attack chain, the AI would become better at identifying the subtle signs of compromise, thus protecting privacy and critical systems more effectively.

- We could allow an AI to play the role of a biased decision-maker, running scenarios in areas like hiring, lending, or policing with skewed data and unethical incentives. Let it observe how bias creeps in, which signals get amplified, and what kinds of fixes actually work. If an AI grasps the mechanics of unfairness from the inside, it might become far better at spotting and correcting it in the real world.

All these examples are about letting AIs play the villain so we can train them to be the hero. Let them explore the worst of human behaviour, including mistakes, moral failures, and the capacity for harm. That’s not because we want them to take on those behaviours, but because first-hand experience of darkness is often the best way to recognise and resist it when the stakes are real. This principle isn’t new. The FBI hires hackers for the very same reason — and no, that’s not just in the movies.

Of course, all of this works only if the AI’s experiences are fully contained and carefully monitored. Playing the villain in a sandbox doesn’t give AI free rein: it’s guided, tested, and corrected so that it learns to recognise harmful behaviour without adopting it. This approach is controversial, and some critics argue that exposing AIs to harmful behaviours could make them better at deception rather than detection. But the real danger lies in deploying these systems into the world before they know how to spot manipulation, deceit, or exploitation, which might leave them vulnerable to being misled or used by bad actors.

As mentioned above, AI companies conduct adversarial training and red-teaming. However, what I’m proposing goes further: giving AI bounded autonomy to actively experiment and devise strategies in realistic sandboxes, not just passively learn from curated examples.

The Next Step: Semi-Real, Controlled Experiences

While traditional sandboxes are fully simulated, the next evolution could let AI experience more realistic complexity. Researchers, ethicists, and AI experts are currently exploring ways to give AI richer experiences in more realistic settings, such as:

- Mixed-reality sandboxes – These environments combine real-world data streams (such as social media trends, market movements, or sensor data) with strict safeguards, allowing AI to experience unpredictable, real-world-like events safely.

- Digital twins – These are basically detailed virtual replicas of different physical systems or processes (which could include cities, infrastructure networks, or entire supply chains) where AI can experiment freely and observe the consequences of its actions in a ‘realistic’ setting.

- Human-in-the-loop challenges – In controlled scenarios, human supervisors actively introduce deception, bias, or ethical dilemmas, allowing AI to navigate morally complex situations. These setups give the AI a chance to learn how subtle manipulation and ethical trade-offs play out in realistic decision-making.

- Bounded autonomy simulations – Here, AI can act on live-like data but within reversible boundaries, such as managing a simulated economy linked to real markets but without actual funds.

These approaches would provide richer training data and more realistic scenarios, not replicating human-like experience, but moving closer to the complexity of the real world.
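For readers who think in code, the bounded autonomy idea from the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any lab actually builds its training environments: the class names `SimulatedMarket` and `BoundedSandbox`, the starting balance, and the trade limit are all hypothetical, and prices are typed in by hand instead of coming from a real market feed. The sketch shows a hard limit on what an agent may do, a record of everything it tried, and a way to rewind the world after it acts.

```python
import copy
from dataclasses import dataclass


@dataclass
class SimulatedMarket:
    """Paper-trading state only; no real funds exist anywhere in this sketch."""
    cash: float = 10_000.0
    holdings: int = 0


class BoundedSandbox:
    """Hypothetical wrapper that keeps an agent's actions bounded and reversible."""

    def __init__(self, max_units_per_trade: int = 10):
        self.state = SimulatedMarket()
        self.max_units_per_trade = max_units_per_trade
        self.audit_log = []   # kept outside the state so it survives rollbacks
        self._snapshots = []

    def snapshot(self) -> None:
        """Save a copy of the current state so it can be restored later."""
        self._snapshots.append(copy.deepcopy(self.state))

    def rollback(self) -> None:
        """Restore the most recent snapshot, if there is one."""
        if self._snapshots:
            self.state = self._snapshots.pop()

    def act(self, units: int, price: float) -> bool:
        """Buy (positive units) or sell (negative units) at the given price.

        Returns False when the action is stopped by a sandbox boundary.
        """
        if abs(units) > self.max_units_per_trade:
            self.audit_log.append(("blocked", units, price))
            return False
        cost = units * price
        if cost > self.state.cash or self.state.holdings + units < 0:
            self.audit_log.append(("rejected", units, price))
            return False
        self.state.cash -= cost
        self.state.holdings += units
        self.audit_log.append(("executed", units, price))
        return True


if __name__ == "__main__":
    sandbox = BoundedSandbox(max_units_per_trade=10)
    sandbox.snapshot()
    sandbox.act(5, 100.0)    # allowed: within the per-trade limit
    sandbox.act(50, 100.0)   # stopped: exceeds the per-trade limit
    sandbox.rollback()       # the simulated world is rewound
    print(sandbox.state, sandbox.audit_log)
```

In this sketch the audit log deliberately lives outside the state that gets rolled back, so human supervisors still see everything the agent attempted even after the simulated world has been reset.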

But here’s what we’re actually doing right now: we continue deploying AI systems with nothing more than a textbook understanding of right and wrong, then act surprised when they stumble in the complicated and unpredictable reality of human behaviour. To prevent AIs from developing bad behaviours, we’ve never let them face temptation, deceit, or the lure of power. In doing so, we’ve kept AIs in the dark and turned safety into a synonym for ignorance.

Remember that lion cub? We’re still keeping the gate closed. And every day we wait, the world outside gets more complex, more dangerous, and harder to navigate. Isn’t it time we stopped pretending that wilful ignorance is safety?

Extra Sources and Further Reading

- Threat Modeling AI/ML Systems and Dependencies – Microsoft

https://learn.microsoft.com/en-us/security/engineering/threat-modeling-aiml

This document explains how standard software-security practices must be adapted for AI/ML systems, with new guidance on adversarial machine-learning threat taxonomies and mitigation steps.

- Securing AI Systems with Adversarial Robustness – IBM

https://research.ibm.com/blog/securing-ai-workflows-with-adversarial-robustness

This article describes how researchers identify adversarial vulnerabilities (like data poisoning or model-evasion) and train models to resist them, thereby improving AI robustness in real-world deployment.

- The State of AI Ethics – Montreal AI Ethics Institute

https://montrealethics.ai/state-of-ai-ethics-report-volume-7/

This paper discusses digital twins as a way to create “living models” that mirror real systems using real data to simulate complex, evolving behaviours.

- True Uncertainty and Ethical AI: Regulatory Sandboxes as a Policy Tool – Springer

https://link.springer.com/article/10.1007/s43681-022-00240-x

This paper argues for regulatory sandboxes not just for safe technical testing, but for moral imagination. The authors see these sandboxes as spaces to explore ethically uncertain, realistic AI behaviour without full real-world deployment.