Key Takeaways

- AI didn’t eliminate copyright uncertainty — it amplified it. What used to be a grey area has turned into a legal and ethical fog with no clear boundaries.

- AI “originality” is still up for debate. What a model generates may feel new, but it often sits somewhere between remix, inspiration, and imitation.

- Risk exists even without bad intent. Copyright issues can arise even when creators act in good faith and believe their AI-generated content is original.

- Responsibility ultimately rests with humans. AI tools don’t understand ownership, consent, or consequences, so the person publishing the work is accountable.

- Using AI safely is less about rules and more about awareness. To reduce legal risk, creators need to review outputs for copyright or plagiarism issues, add meaningful human input, and ensure proper licensing.

Copyright has always been a bit of a grey area. Even before AI entered the picture, we were arguing about what counts as fair use and what crosses the line. Then, AI rolled in and turned that grey area into a thick fog.

Now we’re not just debating whether using a few seconds of a song in a video is legitimate; we’re questioning whether training an algorithm on millions of copyrighted works is legal or even ethical. We’re also asking whether what AI generates is a remix of existing content, an original creation, or something we don’t have words for yet.

When it comes to AI-generated content, nobody really knows who owns what or what counts as original. That uncertainty creates real risk. Given all this, it’s only logical to ask ourselves: how risky is it, really, to use AI-generated content? To answer this question, here are a few facts that paint a pretty eye-opening picture:

- As of late 2025, legal analysts estimate that more than 50 AI‑related copyright infringement lawsuits have been filed against major AI developers in U.S. federal courts over allegations of using copyrighted material without permission.

- The New York Times alleged that OpenAI and Microsoft copied millions of Times articles without permission to train their LLMs, with the lawsuit seeking billions of dollars in damages.

- Getty Images filed lawsuits in both the US and UK, accusing Stability AI of using millions of copyrighted photos from its database without permission to train its image generator, Stable Diffusion. The UK case concluded in November 2025, with Getty largely failing to win on its main copyright claims, though the court did rule in its favour on some trademark infringement issues.

- A survey of artists worldwide found that nearly 90% feel existing copyright laws fail to protect them from the impact of AI tools. Among the artists surveyed, almost three-quarters view the online scraping of creative work for AI training as unethical and expect financial compensation. About half of them also want recognition as the original creator when their work is used for AI training.

- Approximately 60% of marketers who incorporate different AI solutions into their work are worried about potential harm to their brand reputation caused by plagiarism, bias, or content that is inconsistent with their values.

- According to a 2025 article in the Journal of Intellectual Property Law & Practice, many AI developers don’t preserve internal documentation for the data used to train their models because they’re concerned those records could be used as evidence of copyright infringement or data protection violations.

Considering all of the above, it’s no wonder that many people think twice before using AI-generated content. And that caution is entirely justified.

Every Time You Hit “Generate”, You’re Rolling the Dice

In a previous blog post, we explored how AI-related copyright issues can arise. But here’s a shocking fact: every time you use an AI tool to generate content, whether it’s text, images, or music, there’s a chance it could accidentally infringe someone else’s copyright.

Even that “totally original” copy, image, or song your AI has just produced might be tipping its hat to a creator you’ve never heard of. Because here’s the thing: AI doesn’t create from nothing. To work its magic, it had to learn from existing content, so even if the output seems original, there’s always a risk it resembles someone else’s work just a bit too closely.

I’ve seen this happen countless times: sellers generate what seems like a perfectly original image, publish it for sale, and two weeks later get a takedown notice because it is eerily similar to an existing artwork. Creators who don’t know how to use AI to produce unique content routinely face takedown notices or even lawsuits when their AI-generated work inadvertently copies someone else’s work. They have no idea. The AI has no idea (because, well, it’s not sentient). But that doesn’t matter.

So, if the idea of using AI-generated content makes you nervous because you’re not sure it’s truly original, I totally get it. But that doesn’t mean you should avoid AI entirely. Because that’s not realistic, not in the age of AI, anyway. The only thing you need to do is be smart about it.

I’ve been playing with AI long enough to figure out what works and what can get you in trouble. Here’s what I’ve learned (sometimes the hard way).

When Using AI to Write Articles, Blog Posts, and Social Media Posts…

- Stop asking AI to impersonate specific writers: When you craft prompts like “write in the style of [writer’s name]”, you’re asking for trouble. That’s because you’re basically instructing the AI to mimic someone’s creative fingerprint. Instead of doing that, describe the mood or emotions you’re after and let the AI work from there. That way, you’ll get something more original, which may one day save you from having to explain to a lawyer that you were just “testing a prompt”.

- Treat AI like a co-writer, not a ghostwriter: AI is fantastic at sparking ideas, drafting outlines, or giving you a rough first draft. But handing it the reins to produce a full article that you copy and paste unchanged is like letting a robot cook a gourmet meal without supervision. While you might end up with something edible, it probably won’t be what you expected. Even the most advanced LLMs can’t fully capture the subtle nuances of human language, and they still struggle with that dry, AI-like tone that’s all too easy to spot. The best approach I’ve found is to write the piece myself first and then use AI to refine and improve it.

- Edit, rewrite, and sprinkle your personality over every section: If you’re generating text with AI and want it to be genuinely original and stand out, don’t just rely on swapping a few words here and there. Dive in, reshape ideas, and add examples only you could come up with. Think of it as leaving a personal fingerprint on your work, imprinting your voice by throwing in your quirks, your perspective. Why? Because while an AI might hand you some brilliant raw material, you’re the one who needs to turn it into a masterpiece. And yes, that sometimes means heavy editing, rewriting, and maybe even a coffee (or two) for inspiration. At the end, if your article still sounds like it was written by “HelpfulBot 3000”, it probably needs another pass. Or two.

- Add your own research: AI can be a valuable research assistant, but it’s not exactly rigorous about sourcing. To make your content more trustworthy, dig deeper than what AI provides, back up your points with specific case studies, and verify facts. That’s what gives your content credibility. Back when I was just starting with AI and didn’t realise it could hallucinate, I almost published something with a statistic that turned out to be completely made up — not because the AI lied, but because it had learned from content that was wrong. Thankfully, double-checking saved me from looking like an idiot.

- Run everything through plagiarism detectors (multiple ones): Do this even if you wrote every word yourself. I use Grammarly, Quetext, SmallSEOTools, and Copyscape. Yes, that’s overkill, but even the best checkers miss things. Although I haven’t copied a single line in my life, the checkers have flagged passages of mine a few times, a sweet reminder that great minds not only think alike, but sometimes write alike too, using the exact same words and sentence structures.
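To make the idea behind those checkers a bit more concrete, here is a minimal sketch of one common approach: comparing overlapping word sequences ("shingles") between your draft and a text you suspect it resembles. This is an illustration only, not how any of the tools named above actually work; the function names and the five-word window are my own assumptions.

```python
import re


def shingles(text: str, n: int = 5) -> set:
    """Return the set of n-word sequences (shingles) found in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(draft: str, source: str, n: int = 5) -> float:
    """Share of the draft's shingles that also appear in the source (0.0 to 1.0)."""
    draft_shingles = shingles(draft, n)
    if not draft_shingles:
        # Draft is shorter than n words, so there is nothing to compare.
        return 0.0
    return len(draft_shingles & shingles(source, n)) / len(draft_shingles)


if __name__ == "__main__":
    draft = "The quick brown fox jumps over the lazy dog near the river bank"
    source = "A quick brown fox jumps over the lazy dog every morning"
    print(f"Overlap: {overlap_ratio(draft, source):.0%}")
```

A high ratio only tells you where to look; whether a passage is actually too close to someone else’s work is still a human call.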

When Using AI to Generate Images…

- Never name specific artists or works in prompts: Just don’t. It doesn’t matter if you want “something Picasso-esque” or “in the style of Banksy.” Using artists’ names risks producing something too close to their work, and that’s a copyright nightmare waiting to happen. To avoid this, focus on describing the moods, themes, or general styles you’re after.

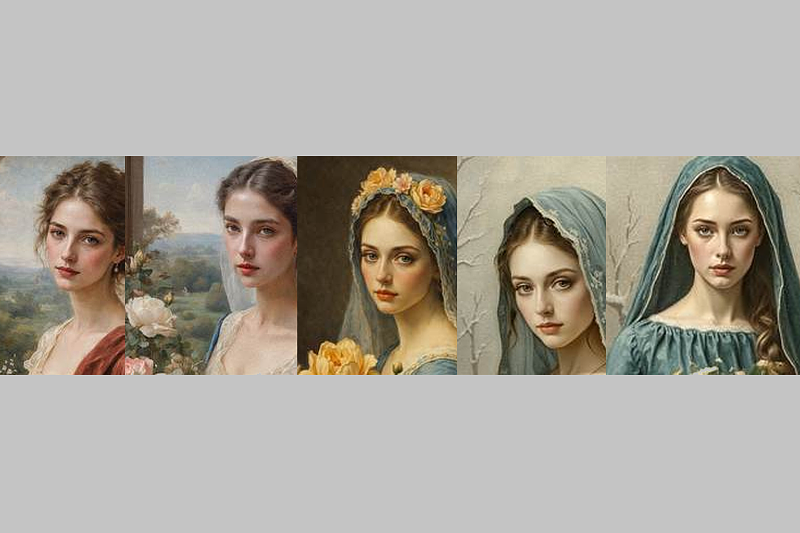

For example, I wanted something that felt like Bouguereau’s classical paintings without actually copying him. So, I prompted:

“Classical academic oil painting of a young woman holding a bouquet of roses, with porcelain-smooth skin, soft natural light, refined features, and a calm, timeless expression. Rich harmonious colours, detailed fabric and veil, delicate floral realism, balanced composition, romantic 19th-century European atmosphere”.

No copyright issues, and I got exactly the aesthetic I wanted. Take a look at the results:

- Save your prompts: Seriously, keep them somewhere. Add them to the image metadata if you can, or at minimum, stick them in a project file. If someone ever questions whether you created something or stole it, having a record of your creative process is your best defence. Plus, it makes creating variations later infinitely easier since you don’t have to start from scratch.

- Don’t use other people’s images as references: I know some tools let you do this, and yes, it’s tempting when you want “exactly like this but different.” However, using someone else’s work as a reference image can create serious copyright problems. If the AI reproduces elements too closely, you’re in trouble.

To test this, I generated an image (fig. 1), then used it as a reference to create a variation with a different background (fig. 2), and finally created a third version from a text prompt alone (fig. 3). As you can see, the version created based on the uploaded reference looks way too similar to the original, while the text-only version is genuinely distinct.

Fig. 1: original image (generated with ChatGPT)

Fig. 2: variation with new background, using fig. 1 as a reference (GenCraft)

Fig. 3: text prompt only, no original image uploaded (GenCraft)

- Use reverse image search: Before you publish any AI-generated images, make sure you run a reverse check. Tools like Google’s Reverse Image Search, TinEye, Yandex, Lenso, Copyseeker, Proofing AI, and PicDefense are your friends here. They’ll flag if your “original” creation looks suspiciously like something already online. While these services won’t replace human judgment, they act like a spellcheck for pixels, catching potential “evil twins”.

When Using AI to Generate Music…

- Stick to licensed sound libraries: For backing tracks, samples, or loops, rely on reputable platforms, such as Epidemic Sound, AudioJungle, or Artlist, which provide proper commercial licenses. Yes, they cost money, but a copyright infringement lawsuit will cost you far more.

- Add your own creative layers: One way to make AI-generated music more unique is to record live instruments, vocals, or additional beats over the AI track. The more human elements you include, the more the track becomes genuinely yours and the less likely it is to trigger copyright issues.

- Never upload copyrighted audio as prompts: Some tools let you feed in existing songs to guide the AI. This is called “audio prompting”, and it’s a terrible idea. Why? Because you’re basically asking the AI to copy someone else’s work. So, don’t do it. Instead, start with fresh, broad genre descriptions or stylistic directions to guide the AI.

- Check for similarities before releasing anything: Before releasing a track, use tools like ACRCloud, Shazam, AcoustID, SoundsLike, Audiosocket, and Duplicate Finders to make sure it doesn’t closely resemble existing songs. While there’s still no consumer-friendly reverse song search that can scan AI-generated tracks against all music online, some AI platforms have built-in checks that flag sequences or patterns that might unintentionally replicate copyrighted material. It’s better to catch issues early than to deal with a takedown notice after your track goes viral.

Universal Tips (or How I Sleep at Night)

Regardless of what type of content you’re creating, these principles apply:

- Document everything. Keep your prompts, your edits, your revision history. This creates a paper trail showing how human input shaped the machine output. This protects you if anyone questions your work.

- Read the terms of service. I know, I know—nobody reads those. But you should. Because while some AI tools grant you full commercial rights, others restrict them heavily. Misunderstanding licensing can land you in legal trouble even if the output itself is fine. And here’s the thing: terms can change. Save a copy when you create something, so you have proof of what the rules were at that moment.

- When in doubt, ask permission. Want to reference someone’s style? Use their characters? Build on their ideas? Get written permission. It may feel old-fashioned and annoying, but it’s the safest route. And honestly? Most creators appreciate being asked rather than discovering their work was used without consent.
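If you want the “document everything” habit to be painless, a tiny script can do the record-keeping for you. The sketch below is one possible setup, not a standard: the function name `log_generation`, the field names, and the one-JSON-object-per-line log file are all my own assumptions. It appends the prompt, the tool name, a timestamp, and a SHA-256 fingerprint of the output file (and, optionally, of a saved copy of the terms of service) to a log.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path) -> str:
    """Fingerprint a file so you can later show it hasn't changed."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def log_generation(log_path, tool, prompt, output_file,
                   terms_file=None, human_edits=""):
    """Append one record describing an AI generation to a JSON Lines log."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "prompt": prompt,
        "output_file": str(output_file),
        "output_sha256": sha256_of(output_file),
        # Hash of your saved copy of the tool's terms, if you kept one.
        "terms_sha256": sha256_of(terms_file) if terms_file else None,
        # Free-text note on what you changed by hand.
        "human_edits": human_edits,
    }
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return entry
```

You would call it right after saving an output, for example `log_generation("provenance.jsonl", "SomeTool", "classical oil painting of...", "portrait.png", human_edits="cropped, recoloured veil")`, where the tool and file names are placeholders. Keeping the log in the same folder as the project makes it easy to hand over if anyone ever asks how the work was made.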

Needless to say, AI is an incredible tool that can spark ideas, speed up workflows, and help you create things you couldn’t have managed alone. But it’s not a magic button that makes copyright issues disappear.

That’s why you need to stay smart by checking your sources, adding your own voice and creativity, and not being shy about getting permissions when needed. Think of it this way: AI can whip up something impressive, but you’re the one who has to make sure it doesn’t replicate or echo someone else’s work…unless you enjoy awkward emails from lawyers.

Extra Sources and Further Reading

- Artificial Intelligence and Copyright – Wikipedia

https://en.wikipedia.org/wiki/Artificial_intelligence_and_copyright

This source provides a general overview of major AI‑related copyright cases and legal questions currently shaping policy.

- Generative AI and Copyright Issues Globally: ANI Media v OpenAI – Tech Policy Press

https://www.techpolicy.press/generative-ai-and-copyright-issues-globally-ani-media-v-openai/

This article explains international copyright disputes involving AI training data and how courts around the world are handling them.

- AI Covers: Legal Notes on Audio Mining and Voice Cloning – OUP Academic

https://academic.oup.com/jiplp/article/19/7/571/7616207

This article explores how AI music and voice cloning raise unique copyright and legal concerns from a scholarly perspective.

- U Can’t Gen This? A Survey of Intellectual Property Protection Methods for Data in Generative AI – arXiv

https://arxiv.org/abs/2406.15386

This study examines intellectual property protection methods and challenges related to generative AI training data.

- Artificial Intelligence and Intellectual Property: Challenges, Litigation, And Future Directions – Mondaq

https://www.mondaq.com/unitedstates/new-technology/1724036/artificial-intelligence-and-intellectual-property-challenges-litigation-and-future-directions

This article explores the emerging legal challenges and litigation involving artificial intelligence and intellectual property, including patent, trade secret, trademark, and copyright issues, and discusses how economic analysis could shape future IP disputes in the age of AI.