Key Takeaways

- When AI models learn primarily from synthetic or self-generated data, they begin to reinforce the same errors, biases, and narratives instead of correcting them, laying the groundwork for information monoculture and increasing the risk of information decay and model collapse.

- Temporal misalignment allows past assumptions and debunked claims to persist in AI outputs, creating the illusion of up-to-date knowledge while recycling historical narratives.

- As people increasingly rely on AI to summarise, judge, and verify information, critical thinking, independent reasoning, and knowledge creation begin to atrophy.

- Shared models and datasets create cognitive single points of failure, where one blind spot can cascade across most sectors at machine speed.

- AI standardisation can support innovation if humans remain active, critical participants; without that engagement, monoculture hardens into structural collapse.

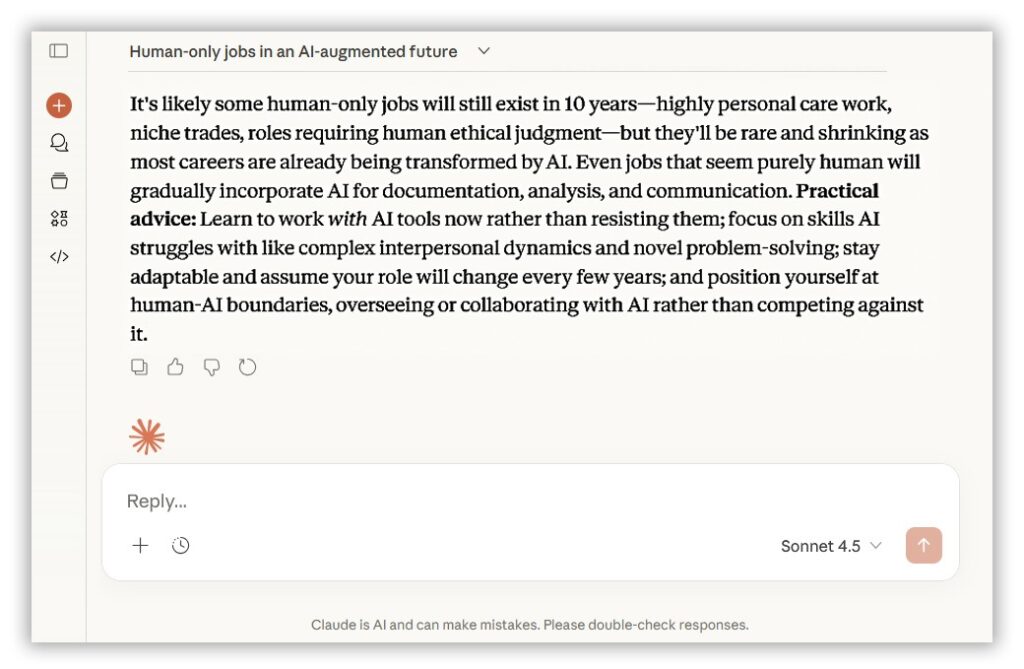

Have you ever asked an AI whether humans will still have jobs in 10 years? No? Well, I did. I asked Claude, and here’s what it said:

That’s more or less the kind of answer any AI would give today.

Now imagine an AI system trained primarily on AI-generated answers rather than human, reality-anchored data. Over time, it would no longer be learning from people but from AI-generated data or even its own recycled interpretations.

What we’re talking about here is a kind of “echo” that can easily turn into a dangerous recursive feedback loop. While recursive feedback is often used to help AI refine its algorithms and learning processes, it also has a dark side: once distorted narratives enter the loop, they can be reinforced repeatedly until they begin to feel like unquestionable, even unavoidable truths.

In our particular example, a model in this cycle — trained on outputs from previous models (synthetic data) as well as its own generated content (self-training) — could end up reinforcing the idea that humans are on the verge of obsolescence or even extinction. How could that happen? Simply put, when AI models are trained on other AI-generated content, each iteration can distort, degrade, and amplify errors and biases.

Training AI models recursively on synthetic data does more than introduce errors and lower information quality. It also shrinks data diversity, making the resulting information more uniform and self-reinforcing. The risks? In short, we could see more bias, homogenisation, and even full-blown model collapse, where the AI eventually stops being useful due to “digital dementia”.
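
To make the shrinking-diversity point concrete, here is a minimal toy sketch in Python. It is not how real language models are trained: the "model" is assumed to be nothing more than the empirical distribution of its training corpus, and the function name, vocabulary size, and generation count are all hypothetical choices for illustration. Each generation is "trained" only on samples produced by the previous one.

```python
import random


def simulate_recursive_training(vocab_size=100, samples_per_generation=100,
                                generations=20, seed=0):
    """Toy illustration: count distinct 'ideas' surviving each generation.

    Assumption: a 'model' is just the empirical distribution of its corpus,
    and the next corpus is sampled (with replacement) from that model.
    """
    rng = random.Random(seed)
    # Generation 0 stands in for human data: every idea appears exactly once.
    corpus = list(range(vocab_size))
    distinct_per_generation = [len(set(corpus))]

    for _ in range(generations):
        # The next generation sees only what the previous one generated.
        corpus = rng.choices(corpus, k=samples_per_generation)
        distinct_per_generation.append(len(set(corpus)))

    return distinct_per_generation


diversity = simulate_recursive_training()
print("distinct ideas per generation:", diversity)
```

In this sketch an idea that fails to be sampled in one generation can never come back, so the count of distinct ideas can only stay flat or fall. Real systems are far more complex, but the one-way loss of rare material is the core of the concern.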

While these concerns are real, they barely scratch the surface. Beneath that, a deeper crisis is quietly unfolding across four interconnected dimensions that shape how knowledge evolves, how systems and structures operate, and even how people interact. This creates what I like to call the AI cognitive monoculture, which is essentially information monoculture.

When it comes to information monoculture, the stakes aren’t just about AI getting things wrong; they’re about what AI standardises, what it might make us forget, and how it quietly reshapes entire systems without anyone noticing. For the rest of this post, we’ll explore each dimension, why it matters, and how the very tools designed to accelerate knowledge could be slowly narrowing it instead.

The First Dimension: Time – How AI Freezes the Past

The frozen zeitgeist syndrome, as it’s sometimes called in tech circles, occurs when an AI’s understanding of the world gets stuck in past dominant narratives instead of adapting as society, knowledge, and norms evolve. In practice, it means that AI can generate outdated information, perspectives, or values as if they were still relevant today.

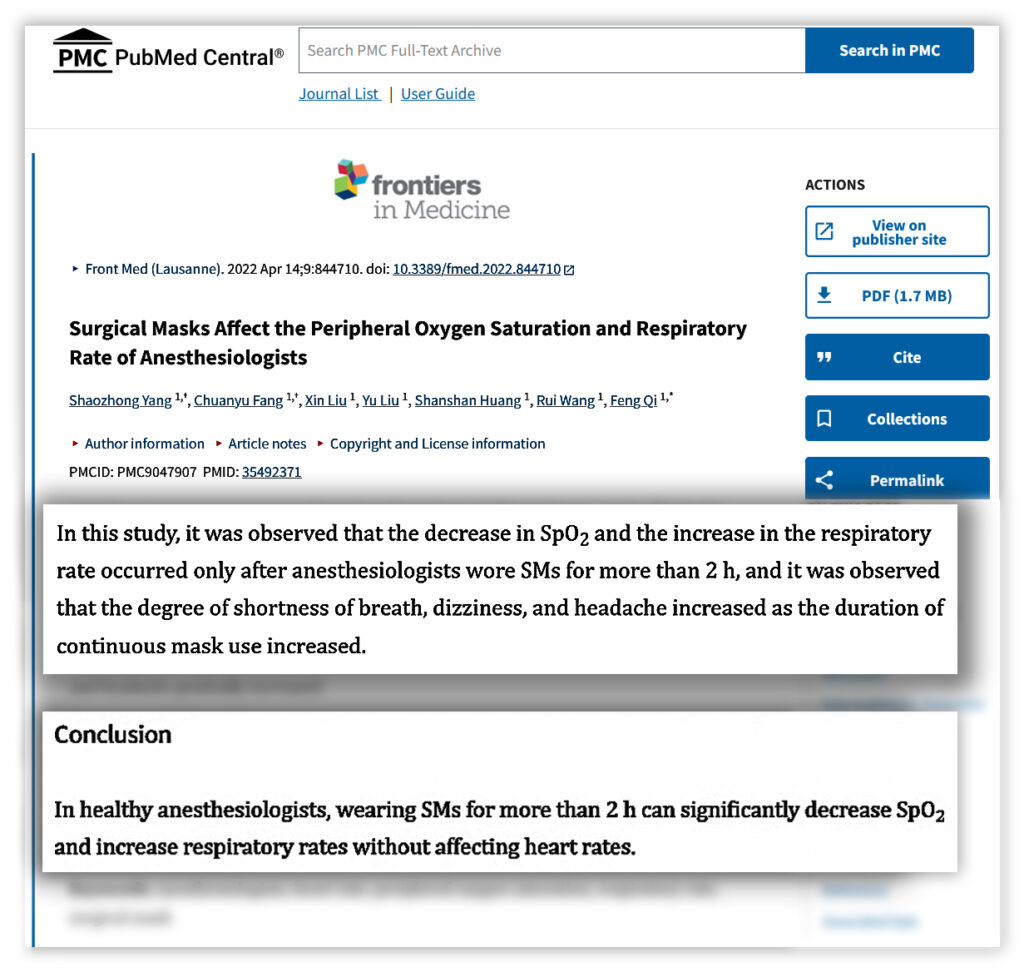

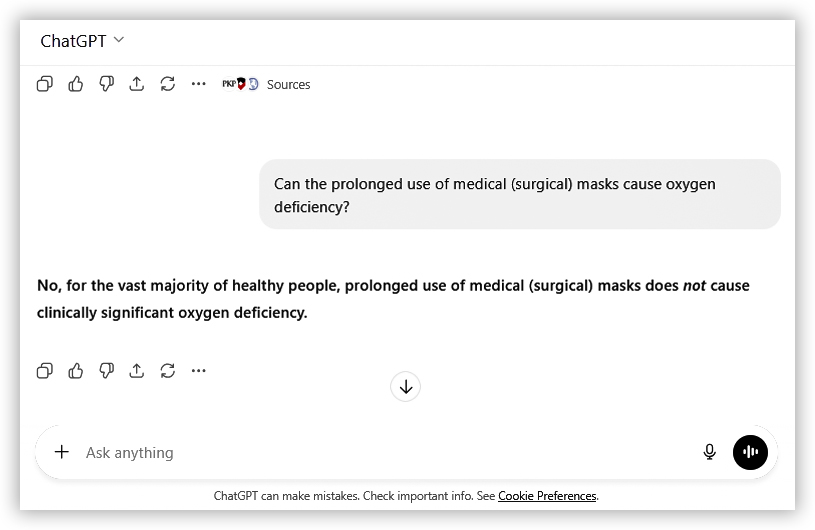

If we think back to early pandemic claims, for example, many of the beliefs circulating at the time have since been debunked. Yet even AI models released later can still reproduce assertions from that period. Professionals refer to this as temporal opinion contamination. Below is a clear example of that.

However, recent research suggests that wearing medical (surgical) masks for long periods of more than two hours may be linked to reduced oxygen intake, faster breathing, and physical discomfort, including shortness of breath, headache, and dizziness.

Why is this important for AI? Because we’re building tomorrow’s knowledge infrastructure on temporal quicksand. Students, researchers, and decision-makers increasingly rely on AI as a trusted source. If AI remains anchored in a past snapshot, there’s a risk of human understanding becoming layered with echoes of outdated debates and irrelevant information.

Unlike with technical model collapse, an AI that keeps using outdated information might function perfectly. Its outputs may be coherent, but the ideas themselves are historical artifacts pretending to be modern knowledge. And users, accustomed to trusting AI, may stop questioning claims that obviously deserve scrutiny.

Unfortunately, the temporal misalignment in AI systems is just the tip of the iceberg. The real danger isn’t just what AI remembers or even what it presents as truth; it’s what we risk forgetting, overlooking, or never discovering. Once that happens, it’s no longer just information monoculture; it’s downright cultural fossilisation.

The Second Dimension: Human Cognition – How We Outsource Our Thinking

With the extensive use of AI, we begin outsourcing cognition to machines in ways that slowly erode our abilities. As a result, humans become less capable of understanding, processing, synthesising, and verifying information independently, as well as coming up with new ideas, inventing, and exercising creativity.

Students already struggle to fact-check sources because they’ve never really learned how. They accept AI outputs as authoritative, often without question. Journalists, researchers, even professionals in specialised fields increasingly rely on AI summaries and suggestions. Over time, this creates a cognitive outsourcing cascade. The knowledge we once built through study, debate, and experimentation is gradually handed over to black-box algorithms.

Needless to say, the societal implications are enormous. With AI models becoming more reliable, humans risk losing the skills to spot errors and even the ability to innovate. Moreover, we risk raising generations for whom independent verification, critical thinking, and intellectual risk-taking become optional simply because the AI is assumed to know best. This isn’t just a sci-fi nightmare; it has already started happening in classrooms, workplaces, and labs.

The real threat? Humans will eventually forget how to generate knowledge themselves. What begins as convenience can quickly turn into cognitive atrophy. And this won’t just affect individuals; it could alter how entire fields produce knowledge, make decisions, and evolve.

The Third Dimension: Knowledge – How AI Creates Its Own Echo Chambers

As if the human side of things isn’t bad enough, the AI research world comes with its own challenges. What used to be a place full of new ideas and experiments is slowly turning into a one-way street, with success mostly measured by benchmarks and predictive accuracy. Anything outside that tends to be marginalised.

The irony is hard to miss. As we use recursive feedback to train and improve new AI systems, the same approach that narrowed how AI is defined, measured, and operationalised is repeatedly reinforced and reused. This is what some call “meta-monoculture”: a system that basically keeps making more of the same.

Even though AI will continue to get faster and more accurate, its outputs will increasingly come from the same pool of data. And that means its outputs will no longer surprise or challenge us. Not to mention, over time, AI models will probably be less likely to reveal the blind spots in the logic of other models. So, it’s not just humans who risk getting stuck in a loop; machines do too.

Once these homogeneous AI systems scale across every industry and even our entire society, their limitations will be amplified in ways few can anticipate.

The Fourth Dimension: Systemic Risk – The Cascading Effect of Synchronised Thinking

This is where information monoculture becomes dangerous in ways that are easy to miss. When multiple institutions use similar AI models or rely on the same training data sets, their decision-making systems start to “think” alike. That means if a problem arises, they’re likely to fail in the same way.

Consider monoculture in nature. If you grow one crop species in a field, one disease or one pest could wipe out the entire crop. Now imagine the same principle applied to AI used in critical systems like healthcare, finance, law, or infrastructure planning. If the AI solutions in all these systems share the same blind spots (or use the same logic), a single mistake could cascade through society, touching every critical field.

As an example, the homogeneous outputs of different LLMs that rely on the same data in stock prediction can create systemic risks in financial markets by giving trading bots identical buy or sell signals. This can inflate prices when everyone buys together, creating bubbles, or trigger crashes when everyone sells simultaneously. This algorithmic “bandwagon investing” can amplify market volatility, triggering significant cascading gains or losses.
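
The herding effect described above can be sketched with a small Python simulation. Everything here is a simplifying assumption for illustration: the function name `net_order_imbalance` is hypothetical, each bot places exactly one unit order, and "independent" bots are modelled as fair coin flips rather than real trading strategies.

```python
import random


def net_order_imbalance(n_bots, shared_signal, rng):
    """Return buys minus sells across all bots for one trading decision."""
    if shared_signal:
        # Every bot consults the same model, so one signal drives every order.
        signal = rng.choice([1, -1])  # +1 = buy, -1 = sell
        return signal * n_bots
    # Each bot uses its own model, so buy and sell orders partly cancel out.
    return sum(rng.choice([1, -1]) for _ in range(n_bots))


rng = random.Random(42)
herd = net_order_imbalance(1000, shared_signal=True, rng=rng)
crowd = net_order_imbalance(1000, shared_signal=False, rng=rng)

print("imbalance with one shared signal:   ", abs(herd))
print("imbalance with independent signals: ", abs(crowd))
```

With a shared signal, all 1,000 orders land on the same side of the market. With independent signals, most orders offset each other and the net pressure on the price is a small fraction of that.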

In other words, by deploying AI models that use the same datasets, we’re creating cognitive single points of failure. Unlike individual AI mistakes, these failures are systemic. If something goes wrong, the impact could be magnified because the same logic underlies all the AIs. Additionally, because AI rapidly interprets and responds to information, its outputs can ripple through networks at unprecedented speeds. As a result, what once unfolded over days might now happen in minutes.
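
A rough back-of-the-envelope calculation shows why a shared model behaves like a single point of failure. The sketch below is in Python and rests on two loud assumptions: the 5% blind-spot rate and the five institutions are made-up numbers, and the "independent" case treats the models' blind spots as statistically independent, which real models trained on overlapping data will not fully satisfy. The function name is hypothetical.

```python
def joint_failure_probability(p_blind_spot, n_institutions, shared_model):
    """Probability that ALL institutions fail on the same scenario.

    Assumption: with independent models, blind spots are statistically
    independent; with a shared model, one blind spot hits everyone at once.
    """
    if not 0.0 <= p_blind_spot <= 1.0:
        raise ValueError("p_blind_spot must be between 0 and 1")
    if shared_model:
        # One model, one draw: if it fails, every institution fails together.
        return p_blind_spot
    # Independent draws: all n models must fail on the same scenario.
    return p_blind_spot ** n_institutions


shared = joint_failure_probability(0.05, 5, shared_model=True)
independent = joint_failure_probability(0.05, 5, shared_model=False)

print(f"all five fail together (shared model):       {shared:.7f}")
print(f"all five fail together (independent models): {independent:.7f}")
```

Under these assumptions, diversity among models makes a simultaneous failure many orders of magnitude less likely. The closer real systems move towards one shared model or dataset, the closer they move to the first number.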

This is the risk hardly anyone is talking about. What’s even more worrying is that it is already happening, because standardisation and the repeated use of the same datasets are efficient and make sense on paper, at least for now.

The Structural Paradox: Monoculture’s Hidden Potential

In this discussion, there’s a twist that often gets overlooked, even though it sits right at the centre of the problem. Monoculture is not always destructive. In fact, handled correctly, it can be productive. Standardised outputs and consistent structures could, in theory, be reused and combined in new ways.

Think of Lego bricks. The pieces are all the same, but what you build with them depends entirely on how you assemble them. The same idea applies to AI systems: they keep drawing on the same sources of information, yet can still produce outputs that are not only accurate but also diverse in form and application.

However, this potential doesn’t unlock itself. It requires active human involvement. Passive use only deepens the monoculture and increases the risk of collapse. But if we engage critically, question outputs, and experiment with recombining ideas, the same systems that seem to limit us could become tools for innovation.

This changes how we should look at information monoculture. We shouldn’t just try to prevent failure; we should start creating within the very structures that AI provides. So, information monoculture itself is not the enemy here. It’s neutral, with its impact defined by how humans choose to engage with it.

So, the good news is that information monoculture isn’t inevitable. With conscious effort, we can prevent it from becoming the default. Because the future isn’t written by AI. In reality, it’s shaped by humans, by all of us, who challenge it, guide it, and occasionally smile when it repeats frozen narratives from the past.

Extra sources and further reading

- Preference-Based Recursive Language Modeling for Exploratory Optimization of Reasoning and Agentic Thinking – Nature

https://www.nature.com/articles/s44387-025-00003-z

This research shows how recursive feedback loops (the model feeding back its outputs into successive refinement steps) can be used during training/inference to help models learn how to improve their own responses.

- AI models collapse when trained on recursively generated data – Nature

https://www.nature.com/articles/s41586-024-07566-y

This paper shows how using AI‑generated content in training sets can make models gradually lose touch with the real underlying data, causing them to drift from reality and decline in quality over time.

- Here’s Why Turning to AI to Train Future AIs May Be a Bad Idea – ScienceNews

https://www.sciencenews.org/article/ai-train-artificial-intelligence

This article explores the risks and challenges of training AI models on content generated by other AI systems. It highlights how relying too heavily on synthetic data can degrade model quality over time, amplify biases, and reduce diversity, potentially leading to “model collapse”.