The Dangers of AI Exceptionalism and Regulatory Delay

When we reflect on what makes us human, we often point to traits like creativity, complex problem-solving, abstract thinking, and moral reasoning. These capacities have long felt deeply personal and uniquely ours.

Yet today, these once-distinct markers of humanity are being challenged by large language models (LLMs), which are beginning to display surface-level behaviours that resemble those very qualities. Some of these systems can even simulate empathy and emotional understanding in ways that feel surprisingly convincing. This raises the question of whether intelligence, emotional as well as intellectual, is truly exclusive to humans, as many still believe.

Seen in this light, it’s more important than ever to reflect on how we relate to intelligent systems from both ethical and legal standpoints. One of the key questions is whether AI should be treated as an “exception” to normal ethical and legal standards, or whether its development and use should be subject to strict regulation. Quite worryingly, a new notion has already emerged: AI exceptionalism, the belief that artificial intelligence is so new, powerful, or complex that it shouldn’t be constrained by existing legal frameworks.

This mindset has helped pave the way for the release of open-source models, such as Meta’s LLaMA and Stability AI’s Stable Diffusion, with minimal oversight, no global standards, and limited accountability for their use or misuse. But should AI really be beyond the reach of the law? Let’s answer this question by exploring the risks of allowing AI to be developed and deployed further without clear legal boundaries.

The Main Risks of Unregulated AI

Without proper regulation, AI poses serious risks to society, such as:

- Unchecked Discrimination and Bias

Unregulated AI systems can replicate and even amplify human biases, with particularly harmful effects in sensitive areas like recruitment, law enforcement, credit decisions, and political affairs. This can lead to widespread discrimination against already marginalised groups, worsening existing inequalities.

- Privacy Violations

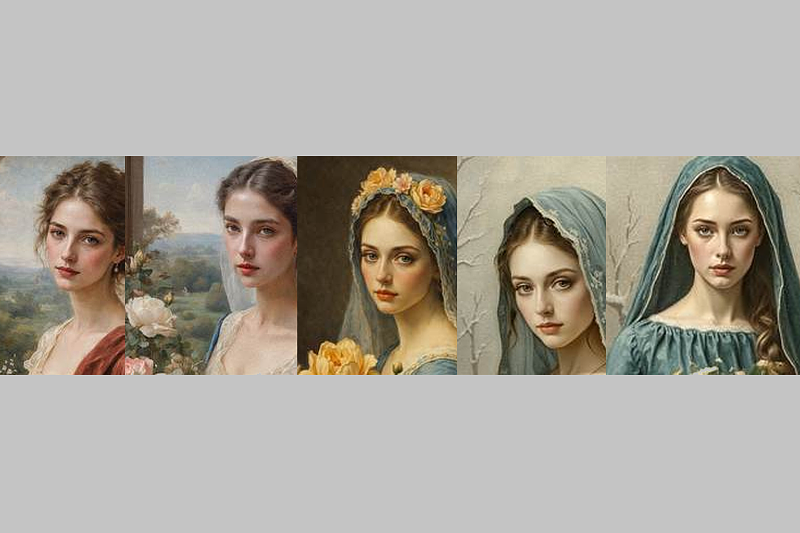

AI models rely heavily on data, much of which is personal and sensitive. What’s more, companies often collect this data without proper consent. Without legal safeguards in place, there’s no guarantee they’ll handle it responsibly, meaning people’s private information can be misused, exploited, leaked, or stolen.

- Copyright and Ownership Issues

AI systems often rely on large datasets that may include copyrighted material, raising concerns about the use of creators’ work without permission and the unclear ownership of AI-generated content.

- Erosion of Trust

If people don’t understand how AI makes decisions or perceive the technology as unaccountable, trust in both the tech itself and the organisations using it may decline.

- Misuse by Malicious Actors

Generative AI systems can be used to create fake news, deepfakes, and other harmful content. Without regulation, it’s all too easy for bad actors to exploit AI to spread misinformation, incite violence, or damage reputations.

- Normalisation of Surveillance and Manipulation

AI enables companies and platforms to monitor behaviour, predict choices, and even influence actions. Over time, this can shape public opinion, alter purchasing habits, or manipulate vulnerable individuals in moments of weakness.

- Consolidation of Power and Monopolies

A small number of tech giants dominate AI development and data assets. Without intervention, their grip could tighten, making it difficult for new players to enter the market. Not only could this give too much influence to a few corporations, but it could also stifle innovation.

- Workplace Exploitation and Surveillance

In the name of efficiency, some organisations already use AI to monitor employees and track productivity. When this is done excessively or without consent, it can create a dehumanising environment that might undermine workers’ dignity and autonomy.

- Threats to Democracy

AI-driven algorithms have already been used to promote biased content and even misinformation, fuelling echo chambers and polarised debates. The resulting fragmentation can undermine social cohesion and hinder constructive political discourse, both vital to a healthy democracy.

- Lack of Accountability When Things Go Wrong

Without clear rules, it’s often hard to establish who is responsible when an AI system causes harm, whether through biased decisions, privacy breaches, or financial losses. Legislation is needed to clarify liability and ensure victims have a path to justice.

- Loss of Control over Innovation

Unregulated AI development may prioritise profit over the public good, potentially leading to systems that serve corporate interests instead of society. Policies are essential to guide innovation in ways that benefit us all.

- Security Threats and Physical Harm

AI systems can be used to design weapons or develop harmful materials, including explosives. When companies fail to prevent these uses, public safety is put at serious risk.

A few more key issues that highlight the urgent need for AI regulation include the risk of bias caused by a lack of diversity in AI development teams, ethical concerns around autonomous decision-making, and the environmental impact of energy-intensive data centres.

Major technological breakthroughs have always pushed legal systems to adapt. From the Industrial Revolution to the digital age, each major shift has forced lawmakers to reconsider how best to protect people’s rights and prevent misuse and outright abuse. As technology continues to evolve, so too must the laws that govern it, especially as new ways of working and interacting challenge old rules.

As Niall Ferguson has pointed out, the rule of law wasn’t just a feature of Western development; it was one of its driving forces. He described it as one of the West’s “killer apps”, protecting property, enabling markets, and creating the conditions for scientific and economic growth. If the law played such a vital role in past revolutions, it’s worth asking why we would leave it out now, just as AI begins to reshape the world around us.

While all of the above makes a compelling case for immediate AI regulation, it’s important to note that not everyone agrees. Some argue that regulation may stifle innovation, hinder competitiveness, or be premature in such a rapidly evolving field. For a balanced perspective, the next article examines these counterarguments and explores why many believe that AI development and use should be free to progress without legal constraints, at least for now.