Key Takeaways

- AI agents act independently, making decisions and taking actions without constant human guidance.

- Agentic AI combines multiple agents to tackle complex, multi-domain goals.

- Reinforcement learning lets agentic AI adapt and optimise through trial and error.

- Agentic AI systems generate outcomes that no single AI could achieve alone.

- The rise of agentic AI challenges us to address ethics, governance, and coexistence with autonomous AI systems.

In a recent interview, Yuval Noah Harari said: “People think of it [AI] as an acronym for artificial intelligence. But with each passing year, AI is becoming less and less artificial. Yes, we write the code, but it’s the first technology in history that can create new ideas by itself, so it’s not really a tool, it’s more like an agent.”

These words capture the shift we’re witnessing right now: a move from “traditional”, rule-based AI systems to more autonomous AI agents capable of acting on their own to achieve specific goals. In this blog post, we’ll explore what AI agents and agentic AI really are, how they differ, and why they mark a major step towards more independent forms of intelligence.

What Is an AI Agent?

Unlike traditional software that waits for precise instructions and constant human intervention, an AI agent is designed to perceive its environment, make decisions, and take actions on its own, based on the specific goals, rules, and contexts defined by its creator.

What makes AI agents distinct from previous software systems is their ability to adapt. Instead of simply following static scripts, they continuously evaluate context and new information, responding in real time within the boundaries of the objectives they were programmed to achieve.
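To make the perceive, decide, and act cycle more concrete, here is a minimal Python sketch. It is only an illustration under simple assumptions: the `ThermostatAgent` class, its `target` and `tolerance` parameters, and the dictionary used as the "environment" are all hypothetical names invented for this example, not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Hypothetical agent that keeps a room near a target temperature."""
    target: float           # the goal defined by the agent's creator
    tolerance: float = 0.5  # how far from the goal is still acceptable

    def perceive(self, environment: dict) -> float:
        # Read the part of the environment the agent cares about.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Choose an action from the goal and the latest observation.
        if temperature < self.target - self.tolerance:
            return "heat"
        if temperature > self.target + self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Acting changes the environment, which is perceived on the next step.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta


def run(agent: ThermostatAgent, environment: dict, steps: int) -> list:
    """Repeat the perceive -> decide -> act cycle and record each action."""
    history = []
    for _ in range(steps):
        observation = agent.perceive(environment)
        action = agent.decide(observation)
        agent.act(action, environment)
        history.append(action)
    return history


room = {"temperature": 18.0}
actions = run(ThermostatAgent(target=21.0), room, steps=5)
print(actions, room["temperature"])
```

The agent is never told which action to take at which step; it is only given a goal and reacts to whatever it observes. Real agents replace the hand-written `decide` rule with a learned model, but the loop itself stays much the same.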

While some AI agents are simple rule-based bots that operate on predefined rules and handle specific, predictable tasks with limited autonomy, others are more complex and can evolve through experience. A customer service chatbot that learns from interactions to improve its responses or a trading algorithm that adapts its strategies based on market conditions are examples of AI agents that combine goal-directed behaviour with autonomy. To learn more about how AI agents work and what types are currently available, read our blog post “AI Agents: What They Are, What They Can Do, and Why You Should Care”.

What Is Agentic AI?

Agentic AI takes the concept of an AI agent a step further. Composed of multiple specialised AI agents, an agentic AI system is capable of pursuing certain goals while also planning, generating strategies, and operating across multiple domains to achieve objectives with minimal human intervention.

Unlike regular AI agents, which are usually tied to specific tasks or environments, agentic AI systems have a higher level of autonomy and can act in more open-ended ways, potentially delivering solutions that their creators hadn’t explicitly envisioned. This is possible because agentic AI systems can not only engage proactively with the context in which they operate but also access external tools, databases, and the internet to find the information they need to complete tasks.
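As a rough illustration of what "accessing external tools" can look like in code, the Python sketch below gives an agent a small registry of callable tools. Everything in it is an assumption made for the example: the `ToolUsingAgent` class, the two tool functions, and the made-up stock and supplier data stand in for real databases or APIs.

```python
from typing import Callable, Dict, List, Tuple


# Stand-ins for external resources (made-up data, not real services).
def search_inventory(item: str) -> int:
    stock = {"laptop": 4, "monitor": 0}
    return stock.get(item, 0)


def find_supplier(item: str) -> str:
    suppliers = {"monitor": "Acme Displays"}
    return suppliers.get(item, "unknown")


class ToolUsingAgent:
    """Hypothetical agent that decides which tools it needs to reach a goal."""

    def __init__(self, tools: Dict[str, Callable[[str], object]]) -> None:
        self.tools = tools
        self.log: List[Tuple[str, str, object]] = []  # record of every tool call

    def call(self, tool_name: str, argument: str) -> object:
        result = self.tools[tool_name](argument)
        self.log.append((tool_name, argument, result))
        return result

    def fulfil_order(self, item: str) -> str:
        # Goal: make sure the item can be delivered.
        if self.call("search_inventory", item) > 0:
            return f"ship {item} from stock"
        # The first tool was not enough, so the agent reaches for another one.
        supplier = self.call("find_supplier", item)
        return f"order {item} from {supplier}"


agent = ToolUsingAgent(
    {"search_inventory": search_inventory, "find_supplier": find_supplier}
)
print(agent.fulfil_order("laptop"))
print(agent.fulfil_order("monitor"))
```

In a production system the decision about which tool to call next would typically come from a model rather than an `if` statement, but the registry pattern gives a sense of how tool access can be kept explicit and auditable.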

How Agentic AI Systems Work

In short, an agentic AI system works by combining machine learning techniques, including natural language processing, with decision-making frameworks to pursue goals autonomously. At its core, it uses different AI models that can evaluate options, predict outcomes, plan sequences of actions across different contexts, and then act on them.

Reinforcement learning often plays a key role in such systems, enabling them to generate different strategies based on the goals they need to achieve and then determine, through trial and error, which approaches lead to success. In this way, agentic AI “learns” how to adapt to different situations and coordinate actions across multiple tasks, effectively creating its own strategies within the boundaries of its programmed objectives.
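The trial-and-error idea can be sketched with one of the simplest reinforcement learning techniques, an epsilon-greedy multi-armed bandit. This is a toy illustration, not how any specific agentic system is built: the strategy names, their success rates, and the `epsilon_greedy` function are assumptions invented for the example.

```python
import random


def epsilon_greedy(success_rates, trials=2000, epsilon=0.1, seed=0):
    """Estimate which strategy works best purely from observed rewards."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    names = list(success_rates)
    counts = {name: 0 for name in names}    # how often each strategy was tried
    values = {name: 0.0 for name in names}  # running average reward

    for _ in range(trials):
        if rng.random() < epsilon:
            choice = rng.choice(names)           # explore: try something at random
        else:
            choice = max(names, key=values.get)  # exploit: use the best so far

        # The environment answers with success (1.0) or failure (0.0).
        reward = 1.0 if rng.random() < success_rates[choice] else 0.0

        # Incrementally update the average reward for the chosen strategy.
        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]

    return values, counts


# Hypothetical strategies; the learner never sees these true success rates.
values, counts = epsilon_greedy({"cautious": 0.2, "balanced": 0.5, "bold": 0.8})
best = max(values, key=values.get)
print(best, counts)
```

The learner is only told whether each attempt succeeded. Over many trials its estimates drift towards the true success rates, so it ends up favouring the strategy that works best while still occasionally testing the others.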

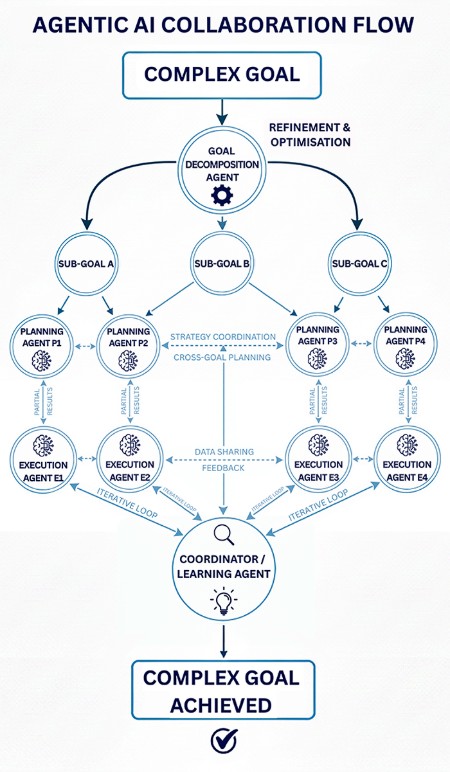

A more interesting aspect, however, is how AI agents are connected within an agentic AI system to make all of this possible. Several connectivity blueprints currently exist for linking AI agents so that they can share information and synchronise their actions efficiently. Thanks to this interconnection framework, the agents form a system that is greater than the sum of its parts. Common interconnection methods include:

- Centralised orchestration: In this model, a central coordinator manages communication, resolves conflicts, and directs the flow of information between agents. This approach ensures a unified strategy but can become a bottleneck if the central node fails or becomes overwhelmed.

- Decentralised peer-to-peer networking: Agents communicate directly with one another, negotiating actions and sharing data without relying on a central authority. This method enhances robustness and scalability but requires sophisticated protocols to handle conflicts and ensure consistency.

- Shared memory or knowledge graphs: Agents maintain a common repository of information, ensuring that all system members have access to relevant data and can make informed decisions. This shared context is crucial for coordinated actions and prevents redundant efforts.

- Communication protocols and channels: Structured messaging systems, APIs, or event-driven channels allow agents to exchange instructions, predictions, and feedback efficiently. Standardised protocols ensure interoperability and facilitate seamless integration of new agents into the system.

- Iterative feedback loops: Agents continuously monitor outcomes, share observations, and adjust their behaviour, allowing the system to adapt dynamically to changes in the environment. This adaptability is key to maintaining optimal performance in complex and evolving scenarios.

- Hierarchical structures (nested agents): This model combines aspects of centralised and decentralised control. A high-level “Super-Agent” or coordinator delegates tasks to lower-level, specialised agents, which then report their progress back up the chain. This is vital for breaking down massive, complex goals into manageable sub-tasks.
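To give a feel for one of the methods listed above, here is a minimal Python sketch of an event-driven communication channel, in which agents exchange structured messages without calling each other directly. The `MessageBus` class, the topic names, and the three agent functions are hypothetical and exist only for this illustration; real systems would usually rely on an established messaging library or protocol.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class MessageBus:
    """Minimal in-process publish/subscribe channel (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the message to every agent listening on this topic.
        for handler in list(self._subscribers[topic]):
            handler(message)


bus = MessageBus()
received = []  # actions that reached the actuator agent


def sensor_agent(reading: float) -> None:
    # Shares an observation with whoever is interested.
    bus.publish("observation", {"sender": "sensor", "value": reading})


def planner_agent(message: dict) -> None:
    # Reacts to a peer's observation and publishes an instruction.
    action = "slow_down" if message["value"] > 80 else "continue"
    bus.publish("instruction", {"sender": "planner", "action": action})


def actuator_agent(message: dict) -> None:
    # Carries out the instruction (here it is simply recorded).
    received.append(message["action"])


bus.subscribe("observation", planner_agent)
bus.subscribe("instruction", actuator_agent)

sensor_agent(95.0)
sensor_agent(40.0)
print(received)
```

Because agents only depend on topic names and message shapes, a new agent can be added by subscribing it to an existing topic, which is the interoperability benefit that standardised protocols aim for.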

These interconnection methods can be combined to create sophisticated agentic AI systems. When several methods are integrated, the result is a hybrid architecture that leverages the strengths of each method to manage the system’s complexity and ensure enhanced contextual awareness, scalability, and robustness.

A good example is a financial trading agentic AI system, in which one agent analyses market trends, another evaluates risk, and a third executes trades. By collaborating, operating in the same context, and adapting over time, the three AI agents can make complex, goal-directed decisions that no single agent could achieve on its own.
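The trading example can be sketched in a few lines of Python. This is a deliberately simplified illustration with made-up prices and thresholds, not a trading strategy: the three agent functions, the `orchestrate` coordinator, and the shared `context` dictionary are assumptions chosen to show centralised orchestration over a shared memory.

```python
from statistics import mean, pstdev


def trend_agent(context: dict) -> None:
    prices = context["prices"]
    # Compare the average of the last three prices with the overall average.
    context["trend"] = "up" if mean(prices[-3:]) > mean(prices) else "down"


def risk_agent(context: dict) -> None:
    # Use the population standard deviation as a crude volatility measure.
    context["risk_ok"] = pstdev(context["prices"]) <= context["max_volatility"]


def execution_agent(context: dict) -> None:
    # Acts only when the other two agents' findings agree.
    if context["trend"] == "up" and context["risk_ok"]:
        context["order"] = "buy"
    else:
        context["order"] = "hold"


def orchestrate(context: dict) -> dict:
    """Central coordinator: runs each specialised agent on the shared context."""
    for agent in (trend_agent, risk_agent, execution_agent):
        agent(context)
    return context


calm = orchestrate({"prices": [100, 101, 102, 104, 105], "max_volatility": 5.0})
volatile = orchestrate({"prices": [100, 120, 90, 130, 135], "max_volatility": 5.0})
print(calm["order"], volatile["order"])
```

Each agent reads and writes only its own part of the shared context, and the final decision depends on all three. What the sketch leaves out is the "adapting over time" part, which would require the agents to learn from the outcomes of earlier decisions.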

The image below illustrates the core parts of an agentic AI system and how the flow of communication works.

Similarities and Differences

Here is a comparison of the main similarities and differences between individual AI agents and agentic AI systems.

| Characteristic | AI Agent | Agentic AI System |
|---|---|---|
| Structure | Usually operates individually. | Involves multiple agents working together. |
| Behaviour | Doesn’t inherently produce emergent outcomes. | Exhibits emergent behaviour through the interaction of agents. |
| Objective | Focuses on a narrower objective (often simpler or short-term). | Can pursue complex, long-term goals across multiple tasks. |
| Resource access | May have limited access to external tools, databases, and the internet. | Can access multiple external tools, databases, and the internet collaboratively. |
| Adaptation | Usually adapts within a single task or context. | Can adapt iteratively across multiple tasks and contexts. |
| Governance and control | Relies solely on its internal decision-making model. | Requires a sophisticated orchestration layer to manage communication, allocate resources, and resolve internal conflicts. |
| Shared fundamentals | Designed to perform tasks autonomously or semi-autonomously. Relies on algorithms, data, and computational models to make decisions. Can process information and adapt based on feedback. | Shares all of these fundamentals. |

Agentic AI is still in its infancy, yet we’re already seeing companies build multi-agent systems that can plan, adapt, and collaborate in ways we’ve never thought possible. Looking ahead, imagine a world where millions of these autonomous intelligences interact across every domain. This rise won’t just unlock incredible innovations; it could also provoke a profound societal shift. So, we cannot help but wonder how these autonomous systems will affect everything, from our economies to our culture. As they become more independent and creative, one of our most critical tasks will likely be figuring out the ethical frameworks and safeguards needed to responsibly manage a future powered by agentic AI.

Extra Sources and Further Reading

- Agentic AI: 4 reasons why it’s the next big thing in AI research – IBM

https://www.ibm.com/think/insights/agentic-ai

This paper defines Agentic AI as the next major development in AI research, emphasising its ability to autonomously plan, adapt, and use external tools by combining the flexibility of large language models (LLMs) with the precision of traditional programming.

- The future of work is agentic – McKinsey & Company

https://www.mckinsey.com/capabilities/people-and-organizational-performance/our-insights/the-future-of-work-is-agentic

How close is the “digital workforce”? This article argues that the future of work is agentic, asserting that companies must prepare immediately for a workforce where human employees collaborate with highly autonomous AI agents that act as a “digital replica” of organisational staff.

- Organizations Aren’t Ready for the Risks of Agentic AI – Harvard Business Review

https://hbr.org/2025/06/organizations-arent-ready-for-the-risks-of-agentic-ai

This article discusses how the increasing autonomy and complexity of AI agents pose significant challenges to traditional risk management frameworks, urging organisations to rethink their approaches to AI governance.

- Build and orchestrate enterprise-grade multi-agent experiences – Google Cloud

https://cloud.google.com/products/agent-builder

Vertex AI Agent Builder is a Google Cloud platform that enables developers to create, deploy, and manage multi-agent AI systems that can collaborate, access enterprise data, and perform complex tasks across domains with minimal code.