Is AI Exceptional After All?

For years, AI evolved quietly behind the scenes. It is now increasingly taking centre stage, transforming business, government, and the public sector in profound ways. In some cases AI’s role remains relatively modest, such as generating written content or powering chatbots, but AI systems are steadily taking on more impactful roles, ranging from identifying individuals through biometrics to assisting doctors with diagnoses.

Despite AI’s vast potential to benefit society across numerous sectors, a handful of big tech companies have gained considerable influence over how the technology is developed, deployed, and used. This concentration of power raises concerns that AI’s trajectory may increasingly reflect certain corporate or political interests that don’t always align with the public good. In this context, regulating AI appears not only reasonable, but necessary.

The European Union was the first to develop and adopt an AI Act. However, the question of whether, and how, AI should be regulated on a global scale remains a subject of intense debate. Some influential voices argue that the case for AI regulation is weak at present and regard the current calls for regulation as premature.

Following on from our previous blog post, let’s go through some of the common arguments made against regulating AI. Only by examining these points can we gain a clearer understanding of whether imposing regulatory measures is truly the right path forward.

Arguments Raised Against AI Regulation

A global regulatory framework remains a distant prospect because several major hurdles stand in its way. The key challenges and objections slowing progress on wider AI regulation include:

- The Risk of Hindering Innovation: Since excessive regulation has the potential to slow down progress and discourage the development of AI technologies, striking the right balance between oversight and innovation is no easy task. Some AI experts worry that overly restrictive rules might push talent and investment towards countries with more accommodating regulatory environments, potentially undermining a nation’s competitiveness in the global AI race.

- Competitive Disadvantage: Stringent regulation could place domestic companies at a disadvantage compared to firms in countries with a more relaxed AI regulatory framework. For instance, China’s permissive legislative approach has significantly bolstered its domestic AI sector, helping it become one of the most advanced countries in this industry. Furthermore, the Chinese government is deeply involved in AI development, offering substantial support across research, funding, and the implementation of AI technologies.

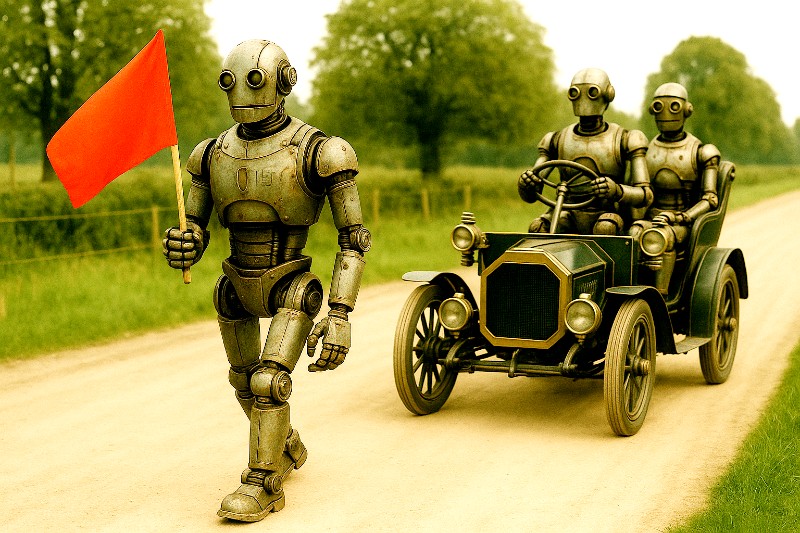

- Rapid Technological Advancement: Currently, AI technology is advancing so quickly that lawmakers may struggle to keep up. As a result, regulations risk becoming outdated before they’re even enforced. For rules to be effective, they must be flexible enough to evolve alongside the technology while providing clear, practical guidance.

- Market-Driven Responsibility: Some industry leaders advocate for minimal government intervention in AI, suggesting that free-market competition and open-source collaboration can effectively guide the responsible development and use of AI. For example, Luther Lowe of Y Combinator opposes heavy-handed regulation and supports open markets to foster innovation, particularly in AI. Similarly, Meta’s chief AI scientist, Yann LeCun, promotes global open-source AI models, emphasising that national regulations should support, not hinder, such initiatives.

- Lack of Global Consensus: Different approaches to AI regulation across countries can create confusion and make international cooperation more difficult. Some governments may prefer to wait for a global agreement before introducing their own rules.

- Limited Expertise within Governments: The complexity and rapid development of AI systems make it difficult for regulators to fully understand how they work, where they can be applied, and the risks they pose. Without sufficient specialised knowledge, there’s a real risk that regulations will be impractical or fail to address key concerns.

- Unforeseen Consequences: Regulations designed to tackle specific AI risks might unintentionally slow down research efforts or restrict valuable uses. Since anticipating such side effects is challenging, it remains uncertain whether AI regulation will allow society to fully benefit from AI’s true potential.

Some industry leaders argue that regulating AI may be premature, given that the technology is still evolving and its long-term implications remain uncertain. However, oversight is essential to ensure its responsible development and use. That said, creating effective regulation is far from straightforward: AI is designed to operate globally, yet regulatory approaches vary widely across countries, and defining shared ethical standards remains a significant challenge.

Ultimately, transparent oversight combined with robust quality assurance and ongoing dialogue between governments, AI experts, and society will be key to harnessing AI’s benefits while minimising risks. It’s clearer now than ever that the path forward requires flexibility, collaboration, and a shared commitment to responsible AI innovation, development, and use.