Key Takeaways

- Consent no longer guarantees control: Even if you refuse to share your data, others’ actions can expose personal information about you.

- Your data can be inferred: Modern algorithms can predict personal traits, preferences, or relationships based on data from people similar to you, meaning privacy isn’t an individual choice anymore.

- Facial recognition works beyond accounts: Platforms can recognise and profile you from tagged photos uploaded by others, even if you never use social media yourself.

- Consent mechanisms are broken by design: Apps and platforms often nudge users into agreeing to invasive permissions through dark patterns, rewards, and functionality locks.

- Privacy must become collective: Protecting personal information now requires community-level safeguards, ethical design, and shared accountability, not just individual consent.

The other day, I installed a new app on my phone and accepted the permissions without even looking through them. Why? Because if I hadn’t granted those permissions, I couldn’t really use the app to its full potential — or at all, for that matter.

And it’s not just apps, is it? Have you ever tried visiting a website, clicked “Reject All” on the cookie banner, only to be told you can’t access the content unless you accept? Or been forced to choose between accepting everything and spending minutes navigating through confusing options? This kind of makes all the debate about consent a bit pointless, doesn’t it?

But that’s when I stopped and asked myself: do we actually have a real choice here, as the law says we should? Or are we just ‘forced’ to agree to all kinds of terms and conditions just to access certain products or services? That’s not all, though, as this also got me thinking about how our data is shared without our consent in countless situations.

Here are a few hypothetical, eye-opening scenarios that may give you something to think about:

- A friend uploads their entire phone contact list to a social media app. What do you think would happen? I’ll tell you: your name, phone number, and social connections can be profiled without your permission.

Now, things are about to take a bizarre turn:

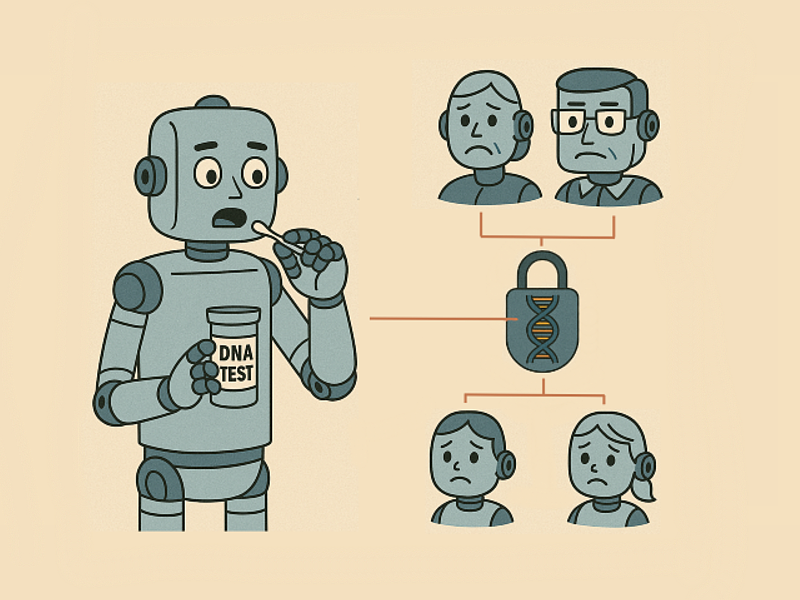

- One of your relatives uploads their DNA to a genetic testing platform that allows sharing results or analysing genetic connections. In that case, some of your genetic information could be inferred and stored on a server, without anyone asking for your consent.

How is that even possible? Put simply, because the relative’s sample contains parts of your DNA, the platform can derive some of your genetic profile even though you never submitted your own sample and never agreed to it.
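To put rough numbers on this, here is a minimal sketch of how much DNA two relatives are expected to share on average. It assumes the standard textbook rule of thumb (each parent-child link halves the shared fraction, summed over the ancestors two people have in common) and ignores real-world variation. The function name and the table of relatives are my own illustration, not how any particular testing platform works.

```python
def expected_shared_fraction(links, common_ancestors):
    """Average fraction of DNA two relatives share.

    links: parent-child steps on the path connecting the two people
    common_ancestors: number of shared ancestors at the top of that path
                      (1 for a direct line such as parent or grandparent)
    """
    return common_ancestors * 0.5 ** links


# Illustrative relationships: (links, common_ancestors)
relatives = {
    "parent or child": (1, 1),
    "full sibling": (2, 2),
    "grandparent": (2, 1),
    "first cousin": (4, 2),
}

for name, (links, ancestors) in relatives.items():
    print(f"{name}: ~{expected_shared_fraction(links, ancestors):.1%}")
# parent or child: ~50.0%
# full sibling: ~50.0%
# grandparent: ~25.0%
# first cousin: ~12.5%
```

These are averages only. Beyond parent and child, the actual amount shared varies from one pair of relatives to the next, but the point stands: a single upload from a close relative reveals a sizeable part of your genome.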

Along the same lines, what if:

- A neighbour installs a facial-recognition doorbell that records and analyses your face each time you walk past their house?

- A friend uses a smart home assistant that records ambient audio, capturing your voice and personal conversations during your visits?

- A colleague links their shopping loyalty card to online profiles, letting algorithms make predictions about your lifestyle and spending habits simply because you shop together?

Do you want more examples? Let’s consider these workplace scenarios:

- A colleague forwards an email thread to a productivity AI tool, turning your messages into training data for a system you never consented to.

- An employee uploads company documents to an AI writing platform, exposing confidential information about clients, partners, and staff to a dataset none of them approved.

Obviously, the list could go on and on. But I think you’ve got the idea. Still, I have one more ‘what if’ scenario: what if someone tags you in photos on Facebook or Instagram? The answer might shock you. Algorithms can map your face, appearance, and relationships, EVEN IF YOU DON’T USE SOCIAL MEDIA. Yes, you read that right.

In case you didn’t know, modern facial recognition systems don’t need you to have an account of your own. When someone uploads a photo and tags you, the platform learns what you look like by analysing your facial features. Over time, as more photos are shared, the system becomes better at recognising you automatically, spotting patterns in who you’re with, where you appear, and how you age. In other words, your identity can be profiled through other people’s data, even though you never chose to participate.
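As a toy illustration of that mechanism, the sketch below builds a face "template" for someone purely from photos that other people tagged, then matches a new photo against it. Everything here is an assumption made for the example: the `TagIndex` class, the three-number "embeddings" (real systems derive much longer vectors from a neural network), and the 0.9 similarity threshold.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TagIndex:
    """Hypothetical store of face embeddings, keyed by the name in the tag."""

    def __init__(self):
        self.samples = {}  # name -> list of embeddings from tagged photos

    def add_tagged_photo(self, name, embedding):
        # Note: nothing here checks whether `name` has an account or agreed.
        self.samples.setdefault(name, []).append(embedding)

    def template(self, name):
        # The template is the average of every embedding tagged with this name.
        vectors = self.samples[name]
        return [sum(column) / len(vectors) for column in zip(*vectors)]

    def recognise(self, embedding, threshold=0.9):
        scores = {n: cosine(self.template(n), embedding) for n in self.samples}
        if not scores:
            return None
        best = max(scores, key=scores.get)
        return best if scores[best] >= threshold else None


index = TagIndex()
index.add_tagged_photo("non_user", [0.9, 0.1, 0.3])  # tagged by a friend
index.add_tagged_photo("non_user", [0.8, 0.2, 0.4])  # tagged by a relative
index.add_tagged_photo("friend", [0.1, 0.9, 0.2])

print(index.recognise([0.85, 0.15, 0.35]))  # non_user
print(index.recognise([0.0, 0.0, 1.0]))     # None
```

The person called `non_user` never appears as a caller anywhere in this code. Every piece of data about them arrives through someone else's upload.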

Note: Meta shut down Facebook’s facial recognition system in 2021 and deleted more than 1 billion face templates after lawsuits and regulatory pressure. However, the company resumed testing facial recognition technology in 2024, mainly to combat scams and assist with account recovery.

What ties all these scenarios together is that your personal data, ranging from facial biometrics and shopping preferences to contact lists and private conversations, can be captured, stored, and analysed at any time, by any system or person with access to it. And the most unsettling part is that none of them needs your permission. Your digital identity is being constructed piece by piece, not through your own choices, but through the decisions of others, who may not even realise the implications of what they’re sharing.

However…

Your Data Can Be Predicted, Even if No One Shares It

Another unsettling truth is that modern algorithms don’t actually need your data to know things about you. They can infer personal traits based on patterns found in people who are similar to you:

- Location data from other phones in your neighbourhood can reveal demographic traits, income levels, or even political leanings you never disclosed.

- Purchase histories from friends you shop with can be used to predict your preferences, lifestyle choices, and even health conditions.

- Social graph analysis (which focuses on who you know, rather than what you say) can reliably estimate your interests, personality type, or future behaviour.
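To make the idea concrete, here is a deliberately simple sketch of inference from a social graph: it guesses something a person never disclosed by taking a majority vote among their friends. The names, the data, and the `infer_attribute` function are all made up for illustration, and real systems rely on far richer models than a vote count.

```python
from collections import Counter

# Hypothetical toy data: who knows whom, and what some people chose to share.
friends = {
    "you": ["ana", "ben", "cara", "dev"],
    "ana": ["you", "ben"],
    "ben": ["you", "ana"],
    "cara": ["you"],
    "dev": ["you"],
}
disclosed = {"ana": "cycling", "ben": "cycling", "cara": "gaming"}
# "you" and "dev" disclosed nothing at all.


def infer_attribute(person, friends, disclosed):
    """Guess an undisclosed attribute by majority vote among a person's friends.

    Returns (guess, share of disclosing friends who agree with the guess).
    """
    votes = Counter(
        disclosed[f] for f in friends.get(person, []) if f in disclosed
    )
    if not votes:
        return None, 0.0
    label, count = votes.most_common(1)[0]
    return label, count / sum(votes.values())


guess, share = infer_attribute("you", friends, disclosed)
print(guess, round(share, 2))  # cycling 0.67
```

Notice that `disclosed` contains no entry for "you". The guess comes entirely from what other people chose to share.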

In other words, even if you personally choose privacy, others’ behaviour can fill in the gaps. As AI gets better at reading between the lines, managing your own privacy becomes nearly impossible because it no longer depends only on what is shared but also on what can be deduced about you.

But isn’t this a violation of privacy, or more precisely, unauthorised use of personal data? You bet it is.

Unfortunately, simply knowing it’s a violation doesn’t change much. You can refuse to use social media, decline genetic testing, and read every privacy policy cover to cover. It still won’t matter. Why? Because you can’t stop others from sharing information about you, and you can’t keep systems from collecting data either.

Ironically, the whole idea of individual consent assumes you have control over your own information. But clearly, you don’t. Not really. Or rather, not in a world where you can’t control what your neighbour’s doorbell records or what your friend shares online. So, perhaps the real question is no longer how we protect our individual data, but whether we need to rethink the entire concept of consent itself. What if consent is no longer meant to be individual, but collective?

Why Individual Consent Has Failed Us

For years, big tech has framed privacy as a matter of personal responsibility: read the terms, review the permissions, decide for yourself. But this idea is crumbling. Why? Because individual consent was simply never designed for a world where information is shared, inferred, and cross-linked at massive scale.

To make things worse, on top of other people’s data choices affecting us, platforms use all sorts of rewards to steer our decisions. Loyalty points, discounts, and extra features are just a few of the incentives that encourage us to hand over far more data than we might want. Studies of this so-called privacy paradox suggest that many people willingly trade their personal data for minimal benefits, even though most of them say they care about privacy.

The issue isn’t necessarily that people are careless. It’s that consent is typically required in environments designed to nudge people towards saying ‘yes’ without thinking, often through dark patterns and psychological pressure. And once one person gives in, the privacy of everyone around them is pulled into the system too.

The Path Forward: Privacy Isn’t Dead, It’s Evolving

Since the old model of individuals managing their own privacy no longer works, we need new frameworks that protect not just isolated users, but entire groups, communities, and networks. After all, one person’s choice can affect many others, which is precisely why we need to shift the conversation from individual choice to collective data protection.

The good news is that this shift is already starting to take shape. From fundamental rights impact assessments under the EU AI Act to core GDPR principles like purpose limitation and data minimisation, organisations are being pushed to assess the wider impact of their systems and to collect only the information they truly need.

At the same time, emerging ideas like data collectives offer a promising path forward. In these models, communities can pool their data and govern it on their own terms, deciding how it’s shared and used. This bottom-up approach not only supports initiatives like citizen science and open data but also makes it possible to train AI systems that reflect shared community values rather than corporate incentives.

Therefore, the point is clear: privacy isn’t disappearing. Instead, it’s evolving into something more nuanced, something that finally recognises what data has become: namely, a collective asset with collective consequences.

Extra Sources and Further Reading

- The Ethics of Facial Recognition Technologies, Surveillance, and Accountability in an Age of Artificial Intelligence – PubMed Central

https://pmc.ncbi.nlm.nih.gov/articles/PMC8320316/

This article examines how facial-recognition systems raise ethical, legal and regulatory challenges, especially in the US, UK and EU, highlighting problems of privacy, bias and accountability in widespread deployment of the technology.

- Facial Recognition Technology in the Market: What Consumers Need to Know to Protect Their Rights – UC Law

https://repository.uclawsf.edu/cgi/viewcontent.cgi?article=1141&context=hastings_science_technology_law_journal

This paper explores how advances in science and technology, such as AI, data analytics or biometric systems, are challenging existing legal frameworks around privacy, consent and accountability.

- Shining a Light on Dark Patterns – Research Gate

https://www.researchgate.net/publication/350340175_Shining_a_Light_on_Dark_Patterns

In this study, the authors provide experimental evidence showing how ‘dark patterns’ in user-interfaces manipulate consumers into unwanted choices and examine how existing laws might address these deceptive design practices.

- Predictive Privacy: Collective Data Protection in the Context of AI and Big Data – Rainer Mühlhoff

https://rainermuehlhoff.de/media/publications/m%C3%BChlhoff_preprint-2022_predictive-privacy-and-collective-data-protection.pdf

This paper argues that modern predictive analytics undermine traditional individual data privacy by inferring sensitive information about people using others’ data, and proposes a shift to collective data-protection frameworks.