Disclaimer

The following content is intended for informational purposes only and should not be interpreted as legal advice. If you have specific questions about your rights, obligations, or risks when using or developing open-source AI tools, consult a qualified legal professional.

What would happen if anyone could build a powerful AI system with no oversight, no rules, no restrictions, no safeguards, and no real limits? The answer is simple: unthinkable chaos. And yet, open-source AI platforms make it possible for almost anyone to build powerful “digital minds” and, so far, we haven’t seen any catastrophic events. But that doesn’t mean the risks aren’t real. In fact, many of them are hiding in plain sight.

In some of our previous blog posts, we explored the legal vacuum surrounding AI alongside the growing global calls for regulation and the uphill battle to reach a worldwide consensus. From the EU’s first steps with the AI Act to Silicon Valley’s push for open markets and innovation, one thing has become clear: the world is struggling to keep pace with AI’s rapid development.

But now, a new and more urgent concern is taking shape: the rise of open-source AI. Open access to technology has long been celebrated as a driver of progress and collaboration. When complex, highly capable AI models are freely shared online, however, the line between widespread access and high-stakes risk becomes alarmingly thin, because powerful AI tools are now accessible to hobbyists, startups, and even malicious actors, often with no safety guardrails in place.

While the question of how AI should be regulated remains unresolved, there’s also a growing debate over whether open-source AI technologies could be undermining the very principles of legality, safety, accountability, and ethics that we’re working so hard to put in place. Join us as we explore this issue in the rest of this blog post.

Open-Source AI: Unlocking Innovation While Navigating Risks

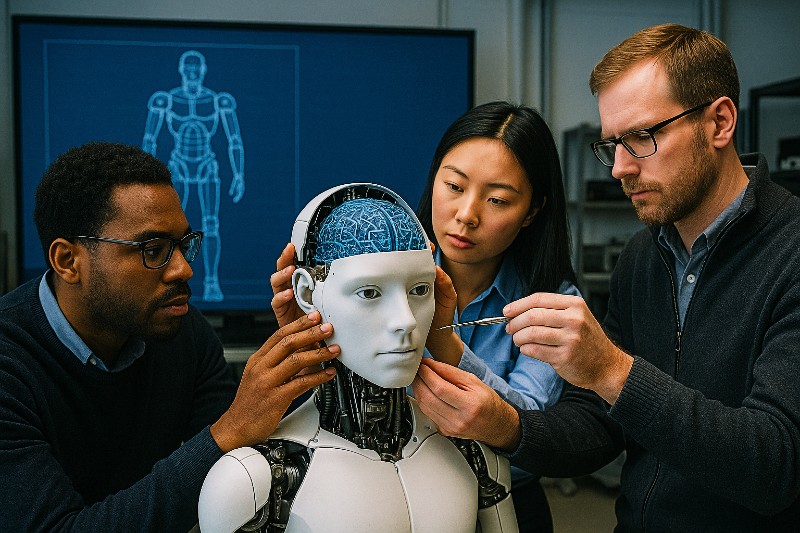

Open-source AI refers to artificial intelligence models and tools whose source code and, in some cases, learned parameters (also known as weights) are made freely available to the public, encouraging accessibility, transparency, and collaboration. Well-known examples of openly released models include Meta’s Llama and Stability AI’s Stable Diffusion. Open-source low-code platforms such as Node-RED (originally developed by IBM), Budibase, and Appsmith are not AI models themselves, but they form part of the wider ecosystem because they can be used to build AI-powered applications.

While these platforms promote decentralisation and enable rapid innovation, they also raise concerns about safety risks and potential misuse, making the balance between openness and control a critical challenge. Now, let’s take a look at the risks of open-source AI that everyone should consider.

Legal Challenges in Open-Source AI Development

Many AI systems are currently trained on extensive datasets sourced from across the internet, including open-source code hosted on platforms like GitHub. This raises an important question: Does using open-source code in training data, and subsequently generating new code based on that training, violate the terms of the applicable licences?

Open-source licences attach conditions to reuse. Copyleft licences such as the GNU General Public License (GPL) require that modified versions of the original work, whether that’s a piece of source code or a complete software programme, carry the same licence when they are distributed, while permissive licences typically require only proper attribution. However, it remains unclear whether AI-generated code qualifies as a derivative work, or as some other form of reuse, that would trigger those licence conditions.

Without clear legal precedent, developers face ambiguity over what counts as fair use and what counts as a licence violation. Ongoing lawsuits, like the litigation over GitHub Copilot, illustrate how legally complex and contested this issue has become, with potential ramifications for how AI-generated code must comply with open-source terms.

Beyond licensing issues, AI-generated code raises broader copyright concerns, including risks under laws like the US Digital Millennium Copyright Act (DMCA). This risk stems largely from the fact that platforms offering AI-generated code are often not transparent about the sources of their training data, which makes it difficult to verify compliance with relevant laws.

The uncertainty around open-source licensing and copyright law creates two major legal risks for AI developers:

- Violating licence terms or copyright law simply by using or distributing AI-generated code – Because AI models learn from large datasets that may include copyrighted or licensed material, the code they generate might unintentionally reproduce protected content, or amount to a derivative work, without the necessary permissions. For example, if a piece of AI-generated code resembles a GPL-licensed snippet closely enough, it could be treated as a derivative work, which may oblige the developer to release their own code under the same licence if they distribute it.

- Facing costly legal disputes due to unclear rules – The absence of solid legal precedent means developers, companies, and AI platforms can become targets for lawsuits from copyright holders or open-source communities. Litigation can be lengthy, expensive, and damaging to reputations, especially for smaller developers or startups. The GitHub Copilot case shows how intellectual property holders might take legal action against the companies, developers, or individuals who use open-source code in AI development. Until the law clarifies how licences apply to open-source AI and its output, users may face unpredictable legal and financial risks.

This lack of clarity affects more than individual developers. Organisations may be reluctant to adopt or invest in AI technologies if they fear that using AI-generated code could lead to licence violations or copyright infringement. This hesitation can slow down innovation and limit the benefits open-source AI tools might otherwise offer. Companies operating in regulated sectors may be particularly cautious, as compliance failures could result in fines or increased regulatory scrutiny. Until clear legal frameworks emerge, this ambiguity remains a barrier to the wider adoption of open-source AI technologies.

What Everyday Users Should Know About Open-Source AI

By making powerful tools accessible to everyone, open-source AI is transforming how artificial intelligence is used and developed. This openness encourages innovation and broadens participation: regular users can help improve models, increase transparency, and prevent monopolies. However, for everyday users, open-source AI also carries important risks that should not be overlooked. Some key concerns include:

- Security risks – Because open-source AI tools are publicly available, malicious actors can misuse them to create deepfakes, automate cyberattacks, or commit biometric fraud. Unlike proprietary AI, which is often tightly controlled, open-source models may lack robust safeguards against abuse. This makes users vulnerable to misinformation and identity theft, among other harms.

- Quality and reliability issues – Open-source AI often lacks consistent standards for data provenance and model training, raising questions about accuracy and about compliance with data privacy laws like the GDPR. Users may unknowingly rely on flawed or biased outputs that could lead to unfair or harmful decisions.

- Unclear accountability – When harm arises from an open-source AI model, determining who is responsible can be complicated, because a model may have many contributors and decentralised governance. This legal ambiguity creates risks for end users who trust and rely on those tools.

- Copyright and ethical challenges in AI-generated art – Open-source models can produce impressive creative works, but they also raise copyright and ethical issues. Since training data may include copyrighted images used without clear licences, AI-generated art can unintentionally infringe artists’ rights, putting users at risk of legal disputes.

As the future of AI unfolds before our eyes, organisations, developers, and everyday users should be aware of security vulnerabilities, unclear liability, ethical challenges, and potential quality gaps when using open-source AI platforms. Three habits are key to benefiting from these technologies safely: validating outputs carefully, understanding licence terms, and staying informed about AI governance and about changes in copyright law that may apply to your work. Together, they help users respect the rights of original creators and meet their legal obligations.